Surviving the front page of HackerNews on a 50 Mbps uplink

Around a month ago I shared one of my blog posts on HackerNews. I guess I lucked out with the choice of topic, because it brought out a lot of enthusiasts who shared their own experiences with older machinery that still works in 2022. I really appreciate the feedback and the stories!

Anyway, what is noteworthy in my opinion is that my blog runs off a residential connection with an upload speed limit of 50 Mbps. Once I noticed the post getting traction, I was worried for a moment. It’s not rare to see a post on the front page of HackerNews go down under all the attention it gets.

Somehow, my post managed to weather the storm. Here’s what happened.

Analyzing the logs

My page doesn’t have any sort of first-party or third-party analytics software running. Tracking users across the web is a big no-go for me and I will live by that, especially on my website. I do have nginx logs, though.

After a quick look around, I found goaccess, a tool that can parse nginx logs and put together some basic statistics. I found it to be good enough for my purposes. Here are some notable statistics.

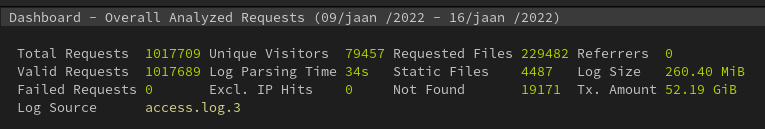

To understand the HackerNews effect, note that the post was published on 2022-01-10 07:42. These logs also include requests to services that I host myself.

The summary of the week during which one of my posts got popular on HN, as reported by goaccess, looks like this.

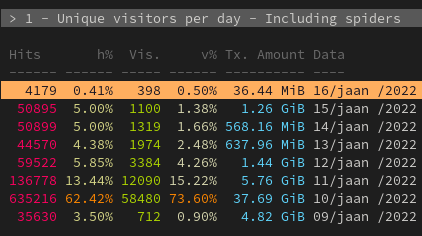

On quiet days, nginx logs around 50K requests a day. On the 10th of January, there was a 12x increase. The effect was also noticeable the next day, when I assume people caught up on the 100+ tabs they usually have open. It may also be down to the post being shared around.
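goaccess did the counting for me, but the per-day numbers are simple enough to reproduce by hand. Below is a minimal sketch, assuming nginx’s default `combined` log format; it is not how goaccess works internally, a custom `log_format` would need a different regex, and `parse_line` and `requests_per_day` are hypothetical names.

```python
import re
from collections import Counter
from datetime import datetime

# Regex for nginx's default "combined" log format (assumption: no custom log_format).
COMBINED = re.compile(
    r'(?P<addr>\S+) \S+ (?P<user>\S+) \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<bytes>\d+|-) '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)


def parse_line(line):
    """Return a dict of fields for one log line, or None if it doesn't match."""
    m = COMBINED.match(line)
    if m is None:
        return None
    entry = m.groupdict()
    # $time_local looks like "10/Jan/2022:07:42:13 +0200" (English month names).
    entry["time"] = datetime.strptime(entry["time"], "%d/%b/%Y:%H:%M:%S %z")
    entry["status"] = int(entry["status"])
    return entry


def requests_per_day(lines):
    """Count parsed requests per calendar day, in the timezone the log was written in."""
    days = Counter()
    for line in lines:
        entry = parse_line(line)
        if entry is not None:
            days[entry["time"].date()] += 1
    return days
```

Opening the access log (commonly `/var/log/nginx/access.log` on Debian-based systems, but check your own config) and passing the file object to `requests_per_day` gives a per-day count that should line up with the daily totals in the goaccess summary.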

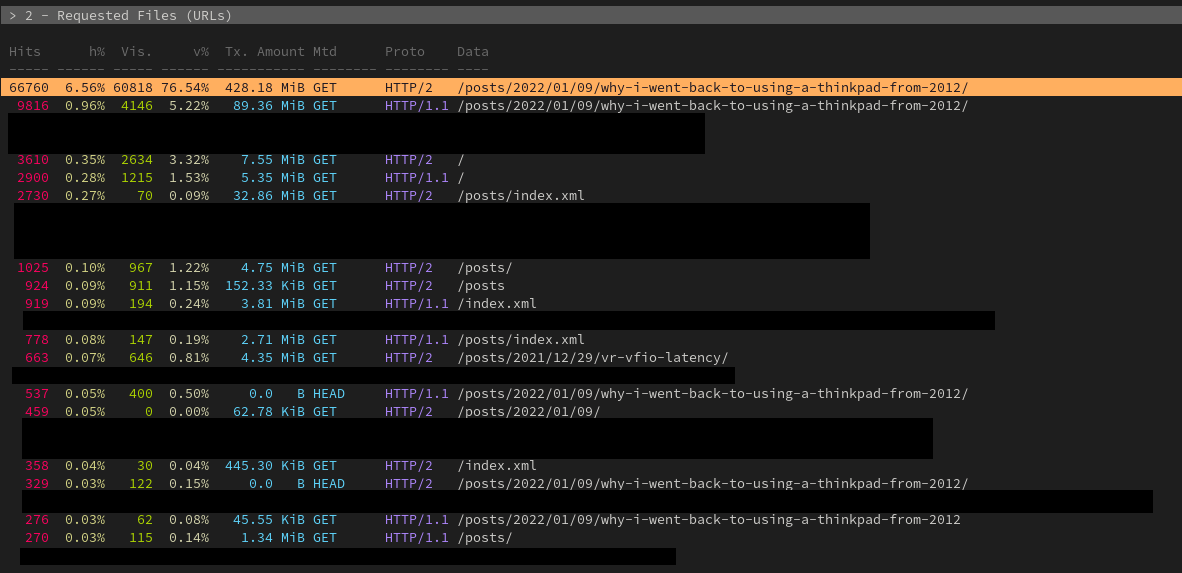

Based on this statistic, it seems that the post got around 75K views, either by real people, crawlers or preview generators.

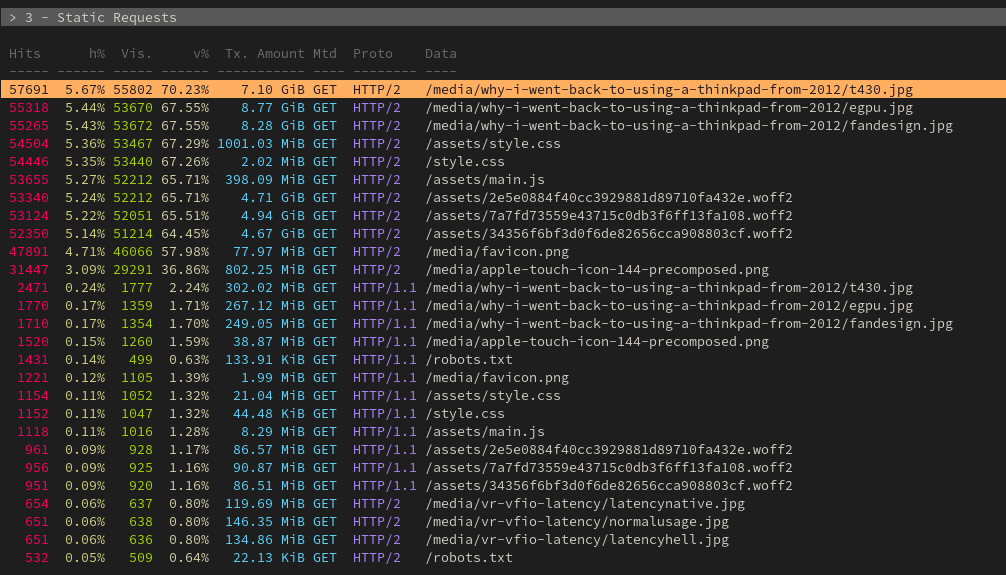

When we look at the number of requests made for specific assets, such as the images in the post, we can see that real impressions are likely to be around 55K. The first image was downloaded more often than the other two, likely because it was used as the preview image when the article was linked on other sites.

This statistic also highlights a surprisingly large cost of web fonts.
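The “count requests for the post’s images” reasoning can be sketched as follows. This is one conservative heuristic of my own, not necessarily how the 55K figure was arrived at: leave out the image that link previews inflate, then take the lowest hit count among the remaining images. The function names and paths are hypothetical.

```python
from collections import Counter


def asset_hits(paths, assets):
    """Count how many requests hit each of the given asset paths.

    `paths` is any iterable of request paths pulled from the access log;
    query strings are stripped so /img.jpg?v=2 counts as /img.jpg.
    """
    wanted = set(assets)
    hits = Counter({asset: 0 for asset in assets})
    for path in paths:
        path = path.split("?", 1)[0]
        if path in wanted:
            hits[path] += 1
    return hits


def estimate_impressions(hits, preview_asset=None):
    """Rough lower bound: ignore the preview image (fetched by link unfurlers)
    and take the smallest hit count among the remaining in-post images."""
    counts = [n for asset, n in hits.items() if asset != preview_asset]
    return min(counts) if counts else 0
```

Taking the minimum rather than the average errs on the low side: a reader who never scrolls far enough to load a lazily loaded image simply isn’t counted.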

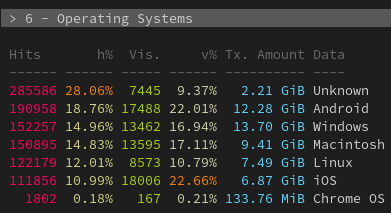

The OS results are likely to be biased, since my self-hosted services go through the same reverse proxy. The “Unknown” section is likely related to crawlers and other bots pinging my server.

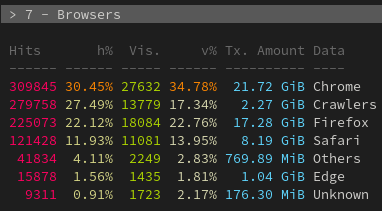

No surprise there: Chrome is the most popular browser used to reach my site. Firefox makes up a good chunk of hits as well, but a lot of those are likely requests made from my own machine.

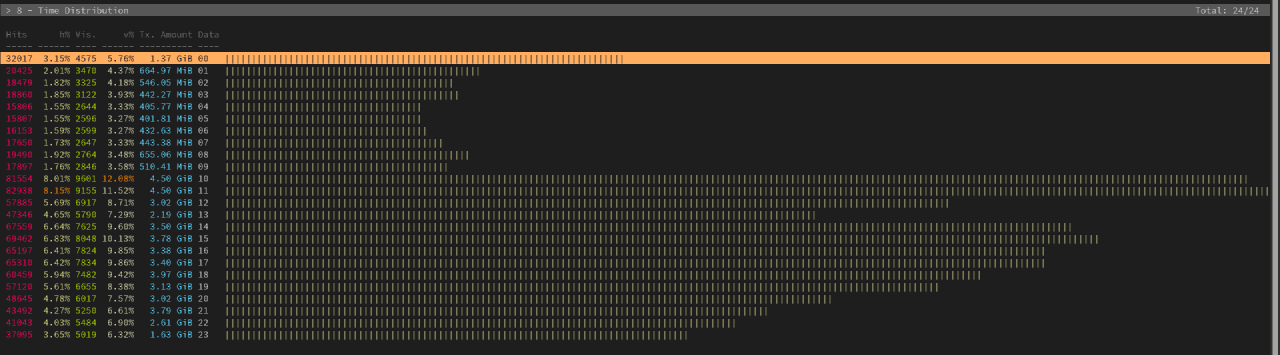

The time distribution of the requests is interesting. You can see the background noise from bots and crawlers during the night. My post went up in the morning in my local timezone (UTC+2), around the time people in Europe wake up and get to work. The other spike around 14:00-15:00 can likely be attributed to our friends across the Atlantic Ocean.

16:00 local time is a bit special. A lot of things coincide with it:

- the review embargo for the latest highly sought-after CPU/GPU lifts, followed by a barrage of videos about the product on YouTube

- the stock market opens and news starts rolling in about some big moves

- if Slack is having issues, it’s usually around this time; I can only assume that people get to work and do a production release at the start of their workday.
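Reading an hour-of-day chart like this one depends on which timezone the hours are bucketed in. Here is a minimal sketch, assuming nginx `$time_local` strings (which carry their own UTC offset) and a fixed UTC+2 as the local time; `hourly_histogram` is a hypothetical name, not a goaccess feature.

```python
from collections import Counter
from datetime import datetime, timedelta, timezone

# Assumption: bucket everything in UTC+2, my local time in January.
LOCAL_TZ = timezone(timedelta(hours=2))


def hourly_histogram(timestamps, tz=LOCAL_TZ):
    """Count requests per hour of day after converting each timestamp to `tz`.

    `timestamps` are nginx $time_local strings such as "10/Jan/2022:07:42:13 +0200";
    the offset embedded in each string makes the conversion unambiguous.
    """
    hours = Counter()
    for raw in timestamps:
        when = datetime.strptime(raw, "%d/%b/%Y:%H:%M:%S %z")
        hours[when.astimezone(tz).hour] += 1
    return hours
```

For example, a request logged at 09:10 with a -0500 offset (US East Coast in winter) lands in the 16:00 bucket in UTC+2.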

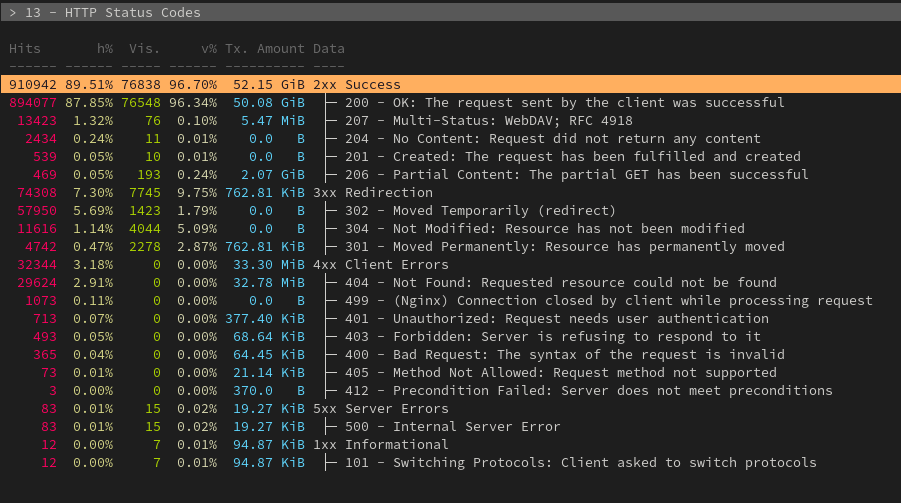

To my surprise, my server survived this without too many issues. The 4xx and 5xx errors can likely be attributed to bots trying to exploit my server and to crawlers visiting links that are no longer valid.

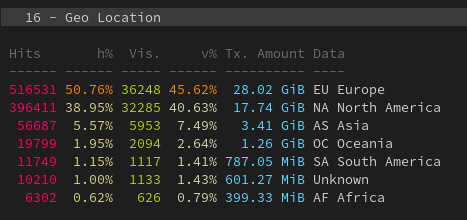

There’s likely a bias towards Europe here as well.

How I built the blog

When I started writing blog posts regularly, I had some principles that I wanted to stick to:

- the focus should be on the writing, not on building the website itself

- the site has to be static: build it once and deploy it, which should mean fewer opportunities for attacks and less load on the CPU

With this in mind, I decided to stick with Hugo. Hugo is just a single binary, has plenty of themes to pick from, and seems like a reasonable-enough choice for a site. It’s not the easiest to use, as you’ll mainly be working in Markdown, and with the theme that I use, I have to copy-paste a `<figure>` section, change the image name it points to, and make sure that I didn’t mess anything up. However, because this approach rules out getting hit by the hottest WordPress plugin vulnerability of the week, I think it’s a fair trade-off to make.

I picked this theme by panr and customized it so that it has a landing page of sorts as well. I haven’t updated it yet and it has some flaws, but it gets the job done, and that’s what matters to me the most.

Hosting media such as images seems like a no-brainer: just put them on the page and be done with it. Pictures taken with an iPhone SE 2020 are quite big, though (3-4 MB per image), which makes the page load very slowly. To keep images from bloating the page, I have set up a system where I keep the original images in one folder, copy them to another one, run `mogrify -resize 1024x768 -quality 85 *.jpg` to keep the images small but still detailed enough, and then deploy those converted images along with the rest of the blog.
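The copy-then-convert step matters because `mogrify` overwrites files in place. A minimal sketch of the same workflow in Python, assuming ImageMagick is installed and on `PATH`; `prepare_images` and the folder layout are hypothetical, not part of Hugo or the theme.

```python
import shutil
import subprocess
from pathlib import Path
from typing import List


def prepare_images(originals: Path, output: Path, run: bool = True) -> List[str]:
    """Copy original JPEGs to `output`, then shrink the copies with mogrify.

    mogrify edits files in place, so the originals are copied first and never touched.
    Returns the command so it can be inspected (or skipped with run=False).
    """
    output.mkdir(parents=True, exist_ok=True)
    copies = []
    # Like the shell glob *.jpg, this is case-sensitive on Linux (.JPG is skipped).
    for src in sorted(originals.glob("*.jpg")):
        dst = output / src.name
        shutil.copy2(src, dst)
        copies.append(str(dst))
    # Same options as the shell command: fit within 1024x768, JPEG quality 85.
    cmd = ["mogrify", "-resize", "1024x768", "-quality", "85", *copies]
    if run and copies:
        subprocess.run(cmd, check=True)
    return cmd
```

`-resize 1024x768` keeps the aspect ratio and fits the image inside that box, but it will also enlarge smaller images; ImageMagick’s `1024x768>` geometry only shrinks images that are larger than the box.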

With converted images, a page with three images fits in less than 1 MB of transferred files. With the original images, the same page would require around 10 MB. The math is simple: with a limited uplink, optimizing the images lets your server serve roughly 10x more requests.
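To make the 10x figure concrete, here is the back-of-the-envelope math for a 50 Mbps uplink. It is an idealized upper bound: it ignores TCP/TLS overhead, compression, caching and the rest of the page’s assets, and `max_pages_per_second` is a hypothetical helper.

```python
def max_pages_per_second(uplink_mbps: float, page_megabytes: float) -> float:
    """Upper bound on full page loads per second over a saturated uplink.

    Uplink speed is in megabits per second, page size in megabytes,
    so divide by 8 to convert bits to bytes.
    """
    uplink_bytes_per_s = uplink_mbps * 1_000_000 / 8
    return uplink_bytes_per_s / (page_megabytes * 1_000_000)


optimized = max_pages_per_second(50, 1)   # 6.25 page loads per second
original = max_pages_per_second(50, 10)   # 0.625 page loads per second
print(optimized / original)               # 10.0
```

In other words, the uplink moves about 6.25 MB per second, so a 10 MB page caps out at well under one visitor per second, while a 1 MB page leaves room for six.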

Residential connections and DNS

If you’re like me, you probably have a crappy router/modem box from your local ISP and a dynamic IP address that usually changes whenever you reboot said box. This presents a challenge when you try to host anything from your residential connection, since the IP address could change at any time.

To resolve this, there are two options I’m aware of: dynamic DNS providers, or setting up a script that talks to your DNS provider over a standard API.

I haven’t personally used any dynamic DNS providers, such as DuckDNS, mainly because my domain registrar has a handy API that I can use to update my IP address automatically whenever it changes. And yes, I once had a bug in the script that triggered an update every minute, which earned me an angry e-mail. Free tech tip: only propagate changes when the IP address actually changes.
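The “only update when the IP changes” rule can be sketched like this. The IP lookup and the registrar call are deliberately left as placeholders (`get_public_ip` and `update_dns` are hypothetical callables), since every registrar’s API is different; the state file simply remembers the last address that was pushed.

```python
from pathlib import Path
from typing import Callable, Optional


def sync_ip(
    get_public_ip: Callable[[], str],
    update_dns: Callable[[str], None],
    state_file: Path,
) -> bool:
    """Push the current public IP to DNS only if it differs from the last one sent.

    Returns True if an update was sent, False if nothing changed.
    """
    current = get_public_ip().strip()
    previous: Optional[str] = state_file.read_text().strip() if state_file.exists() else None
    if current == previous:
        return False  # nothing changed: don't bother the registrar
    update_dns(current)
    # Record the IP only after the update call returned without raising,
    # so a failed update is retried on the next run.
    state_file.write_text(current)
    return True
```

With this check in place, the script can run from cron every minute without hammering the registrar: the API is only called when the address actually changes.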

There’s one downside to this approach: you can set your domain’s TTL (time-to-live) low, but no matter how low you set it, there will be a period after an IP address change during which some DNS servers still point to your old IP address. This is an availability risk you have to consider when setting up a service on a dynamic IP address.
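A rough way to size that risk is to add up the two delays involved. This sketch assumes the change is picked up on the next scheduled check and that resolvers honor the TTL; some resolvers cache longer than the TTL, and the registrar’s own publishing delay is not included, so reality can be worse. `worst_case_stale_seconds` is a hypothetical helper.

```python
def worst_case_stale_seconds(check_interval_s: int, ttl_s: int) -> int:
    """Rough upper bound on how long visitors may still be sent to the old IP."""
    # Up to one full check interval before the script notices the new IP,
    # plus up to one TTL before cached DNS answers expire.
    return check_interval_s + ttl_s


# A script that runs every minute and a 300-second TTL: up to 360 s (6 minutes).
print(worst_case_stale_seconds(60, 300))
```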

Future steps

At one point I took a look at the assets of the Hugo theme that I use and noticed that it includes a dependency named prism.js, which is useful if you want to display code snippets with syntax highlighting. My issue with it was that it was loaded on every page and took up a significant chunk of the transferred data. I decided to remove it, and just like that, the page loads even faster.

There’s still room for improvement. The page also includes some custom fonts; if I decide that a built-in font is good enough, there’s potential for an additional 0.3 MB of savings.

The web is bloated enough already, but at least I can control what I send to the client machines on my website.

Conclusion

If you don’t want to go through all the hassle and just want a website up and running, get yourself a cheap virtual machine from a cloud provider, or use something like GitHub Pages. Those solutions are less likely to hit bandwidth limits.

If you like tinkering and want the decentralized, self-hosted web to exist (not Web 3.0!), feel free to use this post as inspiration for your very own website. There’s a lot you can do with limited resources, and it’s fun to push the limits.

And a quick tech tip: you can use the Wayback Machine as an insurance policy for your website. If you’re concerned about it going down, have the Wayback Machine take a snapshot of it and link to that snapshot somewhere, for example in a comment.
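Snapshots can also be requested from a script. This sketch assumes the Wayback Machine’s “Save Page Now” endpoint accepts a plain GET to `https://web.archive.org/save/<url>`, which is how it has long worked, but rate limits, redirects and account requirements may change, so treat it as a convenience rather than a guarantee. The function names are hypothetical.

```python
import urllib.request

SAVE_ENDPOINT = "https://web.archive.org/save/"


def save_url(page_url: str) -> str:
    """Build the Wayback Machine "Save Page Now" URL for a page."""
    return SAVE_ENDPOINT + page_url


def request_snapshot(page_url: str, timeout: float = 120.0) -> str:
    """Ask the Wayback Machine to archive `page_url` and return the final URL reached.

    Captures can take a while, hence the generous timeout. If the returned URL
    isn't a /web/<timestamp>/ snapshot link, look the page up on web.archive.org.
    """
    req = urllib.request.Request(save_url(page_url), headers={"User-Agent": "snapshot-script"})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.geturl()  # the URL after any redirects
```

Opening the URL from `save_url` in a browser works just as well if you only need the occasional snapshot.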
