Why you might not want to publicly self-host a Wikipedia clone

A while ago I wrote about how easy it is to download an archive of Wikipedia and host it anywhere you want using Kiwix.

Here are a few things you might want to consider before doing so yourself.

The spam

I have a dedicated e-mail address set up so that readers of my blog can reach out to me no matter where they come across my posts. I knew about the risk of spam, but I really appreciate genuine feedback and questions, and I’m willing to mark everything else as spam if needed.

What I did not expect was how much spam would originate from my self-hosted copy of Wikipedia, or that the spammers would use the e-mail address listed on the main ounapuu.ee domain.

The scheme seems to work like this:

0. crawl the web

1. find links to genuine sources that have since broken (HTTP 404 errors)

2. spam the owner of the site with a politely worded e-mail template asking them to replace those broken links with new ones that point to marketing garbage or SEO spam

3. go to step 0

And the worst part is that this probably works for a lot of smaller sites with non-technical owners.

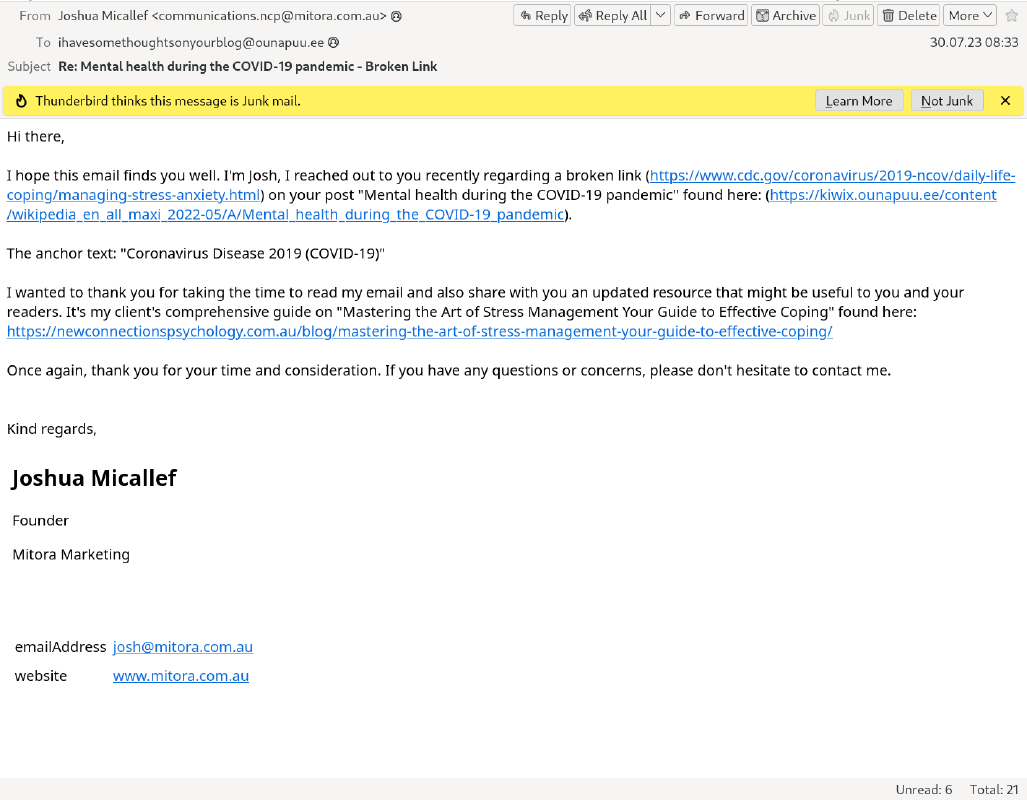

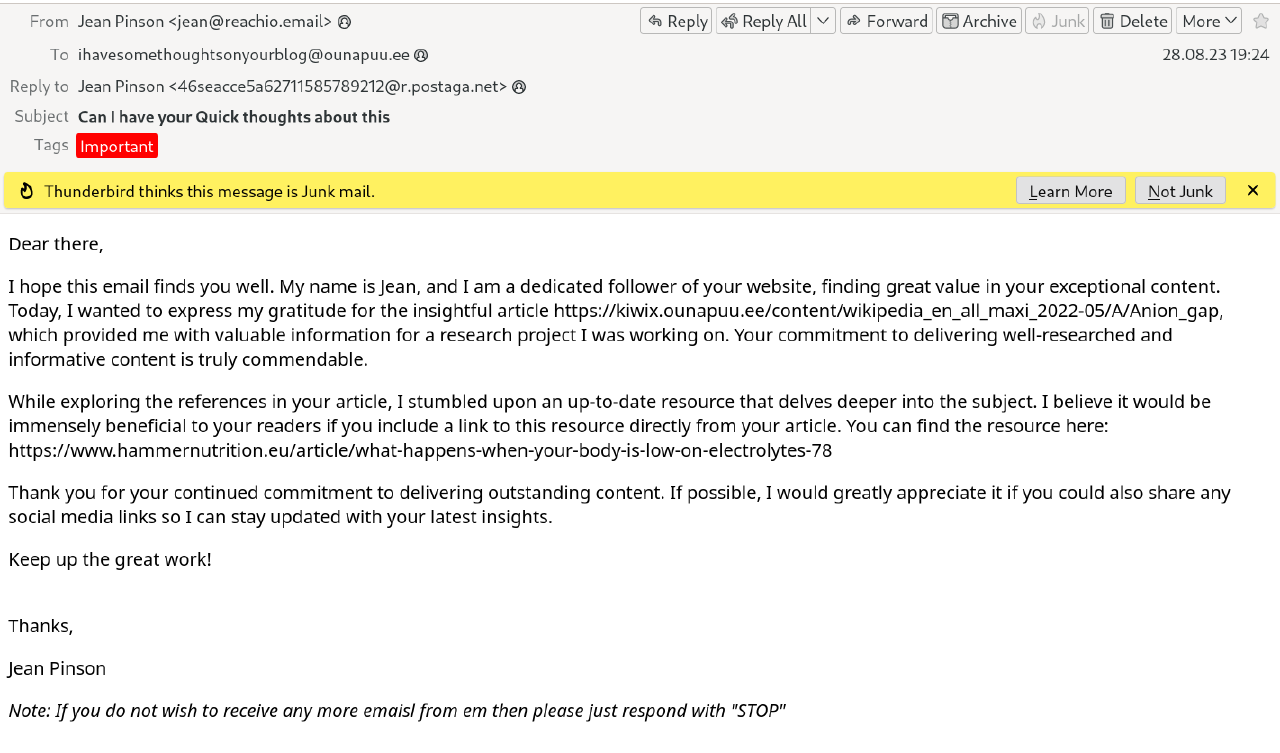

I’ve deleted most of the spam, but the screenshots above show a few recent examples.

Wikipedia has a lot of broken links, and since my copy of it will always be slightly out of date, I will probably keep receiving spam like this until the end of time.

Security

It turns out that the version of Kiwix you can install with `sudo apt install` on Ubuntu Server 22.04 LTS is badly out of date.

I learned about this when CERT-EE reached out to me about an XSS vulnerability in Kiwix.

If you’re running a Kiwix version older than 12.0.0, then congratulations, you have this vulnerability!
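If you’re unsure which side of that line you’re on, note that a naive string comparison gets version numbers wrong ("9.4.1" sorts after "12.0.0" as text). Here’s a minimal shell sketch of a version-aware check. The function name `kiwix_is_vulnerable` is hypothetical, it assumes GNU `sort -V` is available, and the version you pass in has to belong to the same Kiwix component the 12.0.0 cutoff refers to, since the various Kiwix packages are numbered independently.

```shell
#!/bin/sh
# Minimal sketch: is a given Kiwix version older than 12.0.0, the cutoff
# this post gives for the XSS vulnerability?
# kiwix_is_vulnerable is a hypothetical helper name; relies on GNU sort -V.
kiwix_is_vulnerable() {
    fixed="12.0.0"
    installed="$1"
    # sort -V compares version strings component by component, so 9.4.1
    # sorts before 12.0.0 (a plain string sort would put "12" first).
    oldest=$(printf '%s\n%s\n' "$installed" "$fixed" | sort -V | head -n 1)
    if [ "$installed" != "$fixed" ] && [ "$oldest" = "$installed" ]; then
        echo "vulnerable"
    else
        echo "ok"
    fi
}

kiwix_is_vulnerable 11.2.0   # prints "vulnerable"
kiwix_is_vulnerable 12.0.0   # prints "ok"
```

The example version numbers are arbitrary; substitute whatever your own system reports.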

If your distro ships the latest version (Arch Linux, Fedora Linux, etc.), then you’re good to go!

If your distro doesn’t, then feel free to use the Kiwix Docker images to get around that limitation.

A very basic example command that should get you started:

```shell
docker run --rm \
  --name=kiwix \
  -v /path/to/content/:/data \
  ghcr.io/kiwix/kiwix-serve:latest \
  /data/*.zim
```

2024-09-05 update: LLM bots!

Well, it turns out that Kiwix is horribly inefficient with disk I/O when LLM data scrapers are hitting it at 50+ requests per second.

Here is the `docker stats` output after running Kiwix for 4 days. Over 8 TB of disk reads is incredibly wasteful.

```
CONTAINER ID   NAME    CPU %    MEM USAGE / LIMIT     MEM %    NET I/O           BLOCK I/O         PIDS
734d8e1b4385   kiwix   50.28%   10.36GiB / 27.27GiB   38.00%   7.73GB / 94.7GB   8.45TB / 12.9GB   7
```
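To put those counters in perspective, here’s a back-of-the-envelope calculation in shell. It assumes the cumulative `docker stats` counters cover the full 4 days, and that NET I/O is shown as received / sent and BLOCK I/O as read / written, both in decimal units (1 TB = 10^12 bytes). The helper name `io_summary` is hypothetical.

```shell
#!/bin/sh
# Back-of-the-envelope math on the docker stats numbers for the kiwix container.
# Assumes decimal units (1 TB = 10^12 bytes) and counters covering 4 days.
# io_summary is a hypothetical helper name used only for this sketch.
io_summary() {
    disk_read_bytes=8450000000000          # BLOCK I/O, read: 8.45 TB
    net_sent_bytes=94700000000             # NET I/O, sent: 94.7 GB
    uptime_seconds=$((4 * 24 * 60 * 60))   # 4 days = 345600 seconds

    # awk does the floating-point division that POSIX shell arithmetic can't.
    awk -v r="$disk_read_bytes" -v n="$net_sent_bytes" -v t="$uptime_seconds" 'BEGIN {
        printf "average disk reads: %.1f MB/s\n", r / t / 1e6
        printf "bytes read from disk per byte sent: %.0f\n", r / n
    }'
}

io_summary
```

Averaged over the whole period, that works out to roughly 24.5 MB/s of disk reads, or about 89 bytes read from disk for every byte sent over the network.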

As a result, I’ve finally decided to take down my instance at kiwix.ounapuu.ee.
